With powerful LLMs widely available, processing millions of documents is becoming a common scenario, even for smaller projects. Sending requests one at a time through the regular (online) API simply doesn’t cut it at this volume: it is too slow and too error-prone, which makes batch processing the obvious choice. For my recent project, I picked Gemini 2.0 Flash via Vertex AI.

But is 1M documents really that many? Consider processing a corporate document collection or analysing historical records for academic research, where typical tasks include extracting keywords or writing document summaries. A million documents may not seem daunting by today’s standards, but processing that many multi-page documents can still generate substantial costs, easily running into hundreds of dollars.
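To make "hundreds of dollars" concrete, here is a back-of-the-envelope estimate. The token counts and per-million-token prices in the usage lines are placeholders chosen for illustration, not Vertex AI’s actual rates, so check the current pricing page before relying on the result. The default 0.5 multiplier reflects the half-price batch discount discussed later in this post; the function name is hypothetical.

```python
def estimate_cost_usd(
    n_docs: int,
    avg_input_tokens: float,
    avg_output_tokens: float,
    input_price_per_m: float,
    output_price_per_m: float,
    price_multiplier: float = 0.5,
) -> float:
    """Back-of-the-envelope USD cost for a document-processing job.

    Prices are per one million tokens; price_multiplier scales the online
    price (0.5 = batch discount, 1.0 = regular API calls).
    """
    input_cost = n_docs * avg_input_tokens / 1_000_000 * input_price_per_m
    output_cost = n_docs * avg_output_tokens / 1_000_000 * output_price_per_m
    return (input_cost + output_cost) * price_multiplier


# Placeholder assumptions: ~3,000 input tokens per multi-page document,
# ~500 output tokens of structured JSON, illustrative prices per 1M tokens.
online = estimate_cost_usd(1_000_000, 3_000, 500, 0.15, 0.60, price_multiplier=1.0)
batch = estimate_cost_usd(1_000_000, 3_000, 500, 0.15, 0.60)
print(f"online: ${online:,.0f}, batch: ${batch:,.0f}")  # online: $750, batch: $375
```

Output tokens are usually priced higher than input tokens, so long structured outputs (summaries, quotations) can dominate the bill even when the source documents are short.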

The power of structured data extraction

Structured data extraction is one of my go-to approaches for bringing order to messy information. Take a court ruling, the kind of document I’ve been working with recently: five pages dense with legal terminology. You can prompt the model to create a concise summary, extract critical quotations, identify legal references, and output everything in a neatly formatted structure. Below is a fragment of the data extracted from one such ruling.

```json
{
  "title": "Admissibility of cassation appeal in favor of the convicted person who was sentenced to imprisonment with conditional suspension of its execution.",
  "keywords_phrases": {
    "key_phrases": [
      "cassation appeal",
      "admissibility of cassation appeal",
      "imprisonment sentence",
      "conditional suspension of sentence execution",
      "absolute grounds for judgment reversal"
    ],
    "keywords": [
      "cassation",
      "ccp",
      "sentence",
      "suspension",
      "violation"
    ]
  },
  "abstract": {
    "full_abstract": "Example summary..."
  }
}
```

What makes this approach so valuable? In my experience, models hallucinate less when performing structured extraction tasks, and the resulting high-quality output streamlines work for professionals. Legal experts can easily search through documents or quickly identify connections between cases. Structured documents can also be analysed with traditional statistical methods in familiar tools like spreadsheets, so not every analytical task needs a generative AI step.
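As a small illustration of that last point, the sketch below tallies keywords across many extracted rulings using only the Python standard library, no model calls involved. It assumes each record is a dict shaped like the sample above (`keywords_phrases.keywords` is a list of strings); the function name and sample records are hypothetical.

```python
from collections import Counter
from typing import Iterable


def keyword_counts(records: Iterable[dict]) -> Counter:
    """Count how often each keyword appears across extracted records."""
    counts: Counter = Counter()
    for record in records:
        # Records without the field simply contribute nothing.
        keywords = record.get("keywords_phrases", {}).get("keywords", [])
        # Normalise case so "CCP" and "ccp" are counted together.
        counts.update(k.lower() for k in keywords)
    return counts


records = [
    {"keywords_phrases": {"keywords": ["cassation", "ccp", "sentence"]}},
    {"keywords_phrases": {"keywords": ["Cassation", "CCP", "violation"]}},
    {"title": "record without keywords"},
]
print(keyword_counts(records).most_common(2))  # [('cassation', 2), ('ccp', 2)]
```

The same aggregation is a one-liner in pandas or a pivot table in a spreadsheet once the records are flattened; the point is that the model is only needed once, at extraction time.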

Handling batch processing at scale

For my latest project, I turned to my favourite workhorse model, Gemini 2.0 Flash. In my experience, it’s significantly more capable than GPT-4o mini or Claude 3.5 Haiku at a similar price point. The difference is especially noticeable for Polish-language content, where the quality gap is clear while execution times remain comparable.

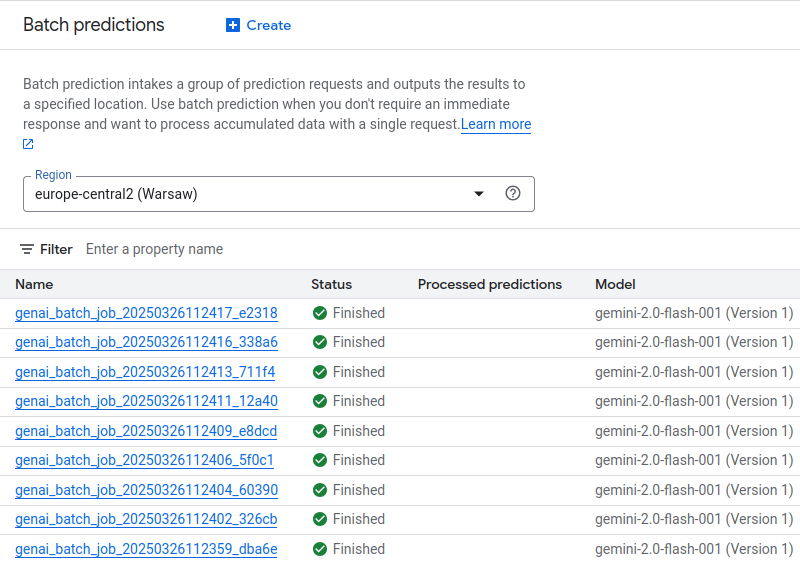

Gemini’s batch processing is available through Vertex AI, which offers generous free tiers for experimentation. Working with documents of 300 to 2,000 words, I easily ran multiple 1,000-case batches in parallel using just the basic configuration, which could certainly be optimised further for your specific needs. Each input record looked like this:

```json
{
  "case_signature": "II CSK 541/18",
  "issue_date_iso": "2019-04-24T00:00:00",
  "url": "https://www.sn.pl/wyszukiwanie/SitePages/orzeczenia.aspx?ItemSID=40504-57a0abe2-a73c-441d-9691-b79a0c36be5c&ListName=Orzeczenia3",
  "content": {
    "raw_markdown": "Sygn. akt II CSK 541/18\nPOSTANOWIENIE\nDnia 24 kwietnia 2019 r.\nSąd Najwyższy w składzie...",
    "text_length": 3093
  }
}
```
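Before a record like this can go into a batch job, it has to be wrapped in a request object, one per JSONL line. The sketch below assumes the `{"request": {"contents": [...], "generationConfig": {...}}}` layout from my reading of Google’s Vertex AI batch prediction docs, with `responseMimeType`/`responseSchema` for structured output; verify the field names against the current documentation. The function name and prompt text are hypothetical.

```python
import json
from typing import Optional


def to_batch_request(
    record: dict,
    instructions: str,
    response_schema: Optional[dict] = None,
) -> dict:
    """Wrap one input record in a Gemini batch prediction request (assumed layout)."""
    text = instructions + "\n\n" + record["content"]["raw_markdown"]
    request: dict = {
        "contents": [{"role": "user", "parts": [{"text": text}]}],
    }
    if response_schema is not None:
        # Ask the model for JSON conforming to the supplied schema.
        request["generationConfig"] = {
            "responseMimeType": "application/json",
            "responseSchema": response_schema,
        }
    return {"request": request}


record = {
    "case_signature": "II CSK 541/18",
    "content": {"raw_markdown": "Sygn. akt II CSK 541/18\nPOSTANOWIENIE..."},
}
# ensure_ascii=False keeps Polish characters readable in the JSONL file.
line = json.dumps(to_batch_request(record, "Summarise this ruling."), ensure_ascii=False)
print(line)
```

Keeping the instructions in every line is redundant but simple; each line in a batch file is an independent request.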

Processing workflow

- Prepare Data: The script reads the input JSON file and splits it into multiple JSONL files with the appropriate format for batch prediction.

- Upload to GCS: The JSONL files are uploaded to Google Cloud Storage.

- Submit Batch Jobs: Batch prediction jobs are submitted to Vertex AI using the specified model.

- Monitor Progress: The script monitors job progress and waits for all jobs to complete.

- Process Results: Results are downloaded from Google Cloud Storage, processed, and saved to the output directory or a database like MongoDB.
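The Prepare Data step above boils down to writing request objects into numbered JSONL files of bounded size. Here is a minimal standard-library sketch; the 1,000-line default mirrors the batch size mentioned earlier, while the function name and `batch_0000.jsonl` naming scheme are hypothetical.

```python
import json
import tempfile
from itertools import islice
from pathlib import Path
from typing import Iterable, List


def write_jsonl_chunks(
    requests: Iterable[dict],
    out_dir: Path,
    chunk_size: int = 1000,
    prefix: str = "batch",
) -> List[Path]:
    """Write request dicts to numbered JSONL files of at most chunk_size lines."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    out_dir.mkdir(parents=True, exist_ok=True)
    it = iter(requests)
    paths: List[Path] = []
    while True:
        chunk = list(islice(it, chunk_size))  # next slice of up to chunk_size items
        if not chunk:
            break
        path = out_dir / f"{prefix}_{len(paths):04d}.jsonl"
        with path.open("w", encoding="utf-8") as f:
            for req in chunk:
                f.write(json.dumps(req, ensure_ascii=False) + "\n")
        paths.append(path)
    return paths


with tempfile.TemporaryDirectory() as tmp:
    demo = ({"request": {"contents": [{"role": "user", "parts": [{"text": f"doc {n}"}]}]}}
            for n in range(2500))
    files = write_jsonl_chunks(demo, Path(tmp))
    print([f.name for f in files])  # ['batch_0000.jsonl', 'batch_0001.jsonl', 'batch_0002.jsonl']
```

Because the input is consumed lazily with `islice`, a generator over a large source file never has to fit in memory at once. The resulting files can then be copied to Cloud Storage, for example with `gcloud storage cp` or the `google-cloud-storage` client’s `Blob.upload_from_filename`.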

Challenges

With new models released weekly (some still experimental) and multiple SDKs available (Google Gen AI SDK, Vertex AI SDK), navigating the Google AI ecosystem takes time. Surprisingly, AI coding assistants offer limited value for this particular task: things change so rapidly that relevant, up-to-date information is hard to find. Your efforts will be better rewarded by consulting the official documentation, which contains the most current and accurate information.

Another obstacle is specific to structured output. Regular Python API queries let you describe the desired output structure as a Pydantic model (dead simple to set up and maintain), but batch queries require converting it to a JSON schema with stricter constraints. The standard model_dump_json() approach didn’t work for me, so I needed a custom parser for nested structured data.
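This is not the author’s parser, but a sketch of the kind of conversion involved. It assumes the starting point is the dict produced by Pydantic v2’s `model_json_schema()`, which places nested models under `$defs` and points to them with `$ref`; the function inlines those references and strips a hypothetical set of keys (`DROP_KEYS`) that a stricter schema validator might reject. Which keys Vertex AI actually rejects is an assumption here. The sample dict is hand-written to mirror Pydantic’s output, so the block runs without Pydantic installed.

```python
from typing import Any, Dict

# Hypothetical keys to strip; adjust to what the current validator rejects.
DROP_KEYS = {"title", "$defs"}


def inline_refs(schema: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a Pydantic v2 JSON schema with every $ref inlined.

    Not handled: recursive models (would recurse forever), refs outside
    "#/$defs/", and keys sitting next to a $ref (they are discarded).
    """
    defs = schema.get("$defs", {})

    def resolve(node: Any) -> Any:
        if isinstance(node, list):
            return [resolve(item) for item in node]
        if not isinstance(node, dict):
            return node
        if "$ref" in node:
            # "#/$defs/Abstract" -> "Abstract"
            return resolve(defs[node["$ref"].split("/")[-1]])
        out: Dict[str, Any] = {}
        for key, value in node.items():
            if key in DROP_KEYS:
                continue
            if key == "properties":
                # Property names are data, not keywords: keep all of them,
                # including a field that happens to be called "title".
                out[key] = {name: resolve(sub) for name, sub in value.items()}
            else:
                out[key] = resolve(value)
        return out

    return resolve(schema)


pydantic_style = {
    "$defs": {
        "Abstract": {
            "properties": {"full_abstract": {"title": "Full Abstract", "type": "string"}},
            "required": ["full_abstract"],
            "title": "Abstract",
            "type": "object",
        }
    },
    "properties": {
        "title": {"title": "Title", "type": "string"},
        "abstract": {"$ref": "#/$defs/Abstract"},
    },
    "required": ["title", "abstract"],
    "title": "Ruling",
    "type": "object",
}
print(inline_refs(pydantic_style))
```

The special case for `properties` matters for documents like the ruling sample above, whose schema has a field literally named `title`: a naive recursive strip of every `title` key would silently delete it.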

Rewards

What do you gain once you’ve cleared these hurdles? Speed, cost efficiency (half the price of regular API calls), and, most importantly, reliability. Once properly configured, batch processing is far more dependable than thousands of individual queries that can run into server overloads or connectivity issues. Honestly, the setup is worthwhile even for smaller projects with just a few hundred or a few thousand API calls: you launch the batch, grab a coffee, and return to find your answers ready in under an hour.
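The "grab a coffee" part is just a polling loop over the submitted jobs. The sketch below works with any object that has a `refresh()` method and a `has_ended` flag; the `BatchPredictionJob` objects in the Vertex AI SDK’s batch prediction examples expose both, but here a hypothetical `FakeJob` stands in so the code runs offline. The function name and polling interval are assumptions.

```python
import time
from typing import Callable, Iterable, List, Protocol


class BatchJob(Protocol):
    """Anything with refresh() and has_ended, e.g. a Vertex AI batch job."""

    @property
    def has_ended(self) -> bool: ...

    def refresh(self) -> None: ...


def wait_for_jobs(
    jobs: Iterable[BatchJob],
    poll_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Block until every job reports has_ended, polling every poll_seconds."""
    pending: List[BatchJob] = list(jobs)
    while pending:
        for job in pending:
            job.refresh()  # fetch the latest state from the service
        pending = [job for job in pending if not job.has_ended]
        if pending:
            sleep(poll_seconds)


class FakeJob:
    """Offline stand-in: finishes after a given number of refreshes."""

    def __init__(self, refreshes_needed: int) -> None:
        self.remaining = refreshes_needed
        self.has_ended = False

    def refresh(self) -> None:
        self.remaining -= 1
        self.has_ended = self.remaining <= 0


jobs = [FakeJob(1), FakeJob(3)]
wait_for_jobs(jobs, poll_seconds=0)
print(all(job.has_ended for job in jobs))  # True
```

Injecting `sleep` keeps the loop testable without real waiting. With real jobs, checking `has_succeeded` afterwards (as Google’s examples do) tells you which output locations are worth downloading.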

Another significant advantage of Gemini 2.0 Flash is its multimodal capability, which enables structured data extraction from images such as invoices or document scans. The processed batch output can be stored directly in a database. I opted for MongoDB because it handles semi-structured documents with a flexible schema well, which makes subsequent data manipulation straightforward.
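Getting from the downloaded output files to database documents means pulling the model’s JSON text out of each line. This minimal sketch assumes each output line looks like `{"request": ..., "response": {"candidates": [{"content": {"parts": [{"text": "<json>"}]}}]}}`, which is my understanding of the Vertex AI batch output format; lines without a usable response are skipped. The function name and sample lines are hypothetical.

```python
import json
from typing import Iterable, Iterator


def parse_prediction_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the model's structured JSON from batch output lines (assumed layout)."""
    for line in lines:
        if not line.strip():
            continue
        row = json.loads(line)
        candidates = row.get("response", {}).get("candidates", [])
        if not candidates:
            continue  # failed request; in a real pipeline, log it for a retry
        parts = candidates[0].get("content", {}).get("parts", [])
        text = "".join(p.get("text", "") for p in parts)
        try:
            yield json.loads(text)
        except json.JSONDecodeError:
            continue  # malformed model output; also worth logging


sample = [
    json.dumps({"request": {}, "response": {"candidates": [{"content": {
        "role": "model",
        "parts": [{"text": '{"title": "Admissibility of cassation appeal"}'}],
    }}]}}),
    json.dumps({"request": {}, "status": "illustrative failed row"}),
]
docs = list(parse_prediction_lines(sample))
print(docs)  # [{'title': 'Admissibility of cassation appeal'}]
```

With pymongo, a non-empty `docs` list could then be written in one round trip via `collection.insert_many(docs)`.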

You can check out my implementation or dive directly into Google’s documentation on batch prediction and structured output.